This page gives an overview of ICON, its configuration, and the tasks for ISC22 Student Cluster Competition teams.

The ICON (ICOsahedral Nonhydrostatic) earth system model is a unified next-generation global numerical weather prediction and climate modelling system. It consists of an atmosphere and an ocean component. The system of equations is solved in grid-point space on a geodesic icosahedral grid, which allows a quasi-isotropic horizontal resolution on the sphere as well as the restriction to regional domains. The primary cells of the grid are triangles resulting from a Delaunay triangulation, which in turn allows a C-grid type discretization and straightforward local refinement in selected areas. ICON contains parameterization packages for scales from ~100 km for long-term coupled climate simulations to ~1 km for cloud-resolving (atmosphere) or eddy-resolving (ocean) regional simulations. ICON has demonstrated high scalability on the largest German and European HPC machines.

The ICON model was introduced into the operational forecast system of DWD (Deutscher Wetterdienst, the German Weather Service) in January 2015 and is used in several national and international climate research projects targeting high-resolution simulations, for example:

ESIWACE (EU project)

CLICCS (German project)

Monsoon 2.0 (German-Chinese Collaboration)

HD(CP)2 (German project)

Here is our introduction to ICON by Dr. Panagiotis (Panos) Adamidis, HPC Group Leader at DKRZ:

And here are the slides:

To obtain the ICON Framework Code, you first have to agree to the terms and conditions of the personal non-commercial research license; see the file MPI-M-ICONLizenzvertragV2.6.pdf below.

If you accept the license, register an account at DKRZ here: https://luv.dkrz.de/projects/newuser/. If your e-mail address is not accepted, write to support@dkrz.de. We will then activate your e-mail address so that you can register.

After your account has been set up, you can log in and request membership in project 1273 (ICON at student cluster competition isc22): https://luv.dkrz.de/projects/ask/?project-id=1273. By requesting access, you agree to the personal non-commercial research license delivered with the code.

You now have access to the ICON version that will be used for the Student Cluster Competition. Go to https://gitlab.dkrz.de/icon-scc-isc22/icon-scc and download the code.

HDF5: https://www.hdfgroup.org/downloads/hdf5/source-code

NetCDF-C: https://downloads.unidata.ucar.edu/netcdf-c/4.8.1/src/netcdf-c-4.8.1.tar.gz

NetCDF-Fortran: https://downloads.unidata.ucar.edu/netcdf-fortran/4.5.4/netcdf-fortran-4.5.4.tar.gz

UCX: https://openucx.org/downloads

CDO: https://code.mpimet.mpg.de/attachments/download/24638/cdo-1.9.10.tar.gz

If you get the following errors while building CDO:

    undefined reference to symbol '__libm_sse2_sincos'
    error adding symbols: DSO missing from command line

set the following LDFLAGS and rebuild:

    export LDFLAGS="-Wl,--copy-dt-needed-entries"

Optional: HPC-X (https://developer.nvidia.com/networking/hpc-x) or another MPI library.

To learn how to install and use HPC-X, see "Profiling using IPM and HPC-X".
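The I/O libraries above are usually built in dependency order: HDF5 first, then netCDF-C against it, then netCDF-Fortran against netCDF-C. The sketch below is only a dry run that prints the commands so they can be reviewed before anything is executed. The install prefix, the use of the mpicc/mpif90 wrappers and HDF5's --enable-parallel flag are assumptions, chosen because the sample configuration below enables parallel netCDF and points both NETCDF_ROOT and NETCDFF_ROOT at the same NETCDF_DIR.

```bash
#!/bin/bash
# Dry run: print, in dependency order, the commands that would build the I/O
# libraries ICON needs. Nothing is executed. Assumptions (not from this page):
# the tarballs are already unpacked in the current directory, PREFIX is where
# you install software, and HDF5 is built with MPI support (--enable-parallel).
set -eu

PREFIX=${PREFIX:-$PWD/sw}    # hypothetical install root
MPI=${MPI:-hpcx-2.10.0}      # MPI tag used in the install directory names
HDF5_DIR=$PREFIX/hdf5-1.12.1-$MPI
NETCDF_DIR=$PREFIX/netcdf-4.8.1-$MPI

plan_builds() {
  # 1. HDF5 first, because netCDF-C links against it.
  echo "(cd hdf5-1.12.1 && CC=mpicc ./configure --prefix=$HDF5_DIR --enable-parallel && make -j && make install)"
  # 2. netCDF-C, pointed at the HDF5 headers and libraries.
  echo "(cd netcdf-c-4.8.1 && CC=mpicc CPPFLAGS=-I$HDF5_DIR/include LDFLAGS=-L$HDF5_DIR/lib ./configure --prefix=$NETCDF_DIR && make -j && make install)"
  # 3. netCDF-Fortran into the same prefix as netCDF-C, matching the sample
  #    config where NETCDFF_ROOT is also set to NETCDF_DIR.
  echo "(cd netcdf-fortran-4.5.4 && CC=mpicc FC=mpif90 CPPFLAGS=-I$NETCDF_DIR/include LDFLAGS=-L$NETCDF_DIR/lib ./configure --prefix=$NETCDF_DIR && make -j && make install)"
}

plan_builds
```

To use it, redirect the output to a file (for example "plan_builds > build_libs.sh"), check the paths, and run that file with bash. netCDF-Fortran's configure may additionally need $NETCDF_DIR/lib on LD_LIBRARY_PATH so that its test programs can run.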

On a new system you can adjust the configuration file config/dkrz/mistral.intel to the new environment.

Sample config file:

    #!/bin/bash

    set -eu

    unset CDPATH

    SCRIPT_DIR=$(cd "$(dirname "$0")"; pwd)
    ICON_DIR=$(cd "${SCRIPT_DIR}/../.."; pwd)

    # HDF5_DIR, NETCDF_DIR, CC and FC are taken from the environment (see the
    # build example below); with 'set -eu' the script stops if one is unset.
    MODULES=""
    SW_ROOT=

    HDF5_ROOT=$HDF5_DIR
    HDF5_LIBS='-lhdf5'

    NETCDF_ROOT=$NETCDF_DIR
    NETCDF_LIBS='-lnetcdf'

    # netCDF-Fortran is installed into the same prefix as netCDF-C.
    NETCDFF_ROOT=$NETCDF_DIR
    NETCDFF_LIBS='-lnetcdff'

    MKL_LDFLAGS='-mkl=sequential'

    XML2_ROOT='/usr'
    XML2_LIBS='-lxml2'

    PYTHON="python2"

    ################################################################################

    AR='ar'

    BUILD_ENV=". \"${SCRIPT_DIR}/module_switcher\"; switch_for_module ${MODULES}; export LD_LIBRARY_PATH=\"${HDF5_ROOT}/lib:${NETCDF_ROOT}/lib:${NETCDFF_ROOT}/lib:\${LD_LIBRARY_PATH}\";"

    CFLAGS='-gdwarf-4 -O3 -qno-opt-dynamic-align -ftz -march=native -g'
    CPPFLAGS="-I${HDF5_ROOT}/include -I${NETCDF_ROOT}/include -I${XML2_ROOT}/include/libxml2"
    FCFLAGS="-I${NETCDFF_ROOT}/include -gdwarf-4 -g -march=native -pc64 -fp-model source"
    ICON_FCFLAGS='-O2 -assume realloc_lhs -ftz -DDO_NOT_COMBINE_PUT_AND_NOCHECK'
    ICON_OCEAN_FCFLAGS='-O3 -assume norealloc_lhs -reentrancy threaded -qopt-report-file=stdout -qopt-report=0 -qopt-report-phase=vec'
    LDFLAGS="-L${HDF5_ROOT}/lib -L${NETCDF_ROOT}/lib -L${NETCDFF_ROOT}/lib ${MKL_LDFLAGS}"
    LIBS="-Wl,--as-needed ${XML2_LIBS} ${NETCDFF_LIBS} ${NETCDF_LIBS} ${HDF5_LIBS}"

    MPI_LAUNCH=mpirun

    EXTRA_CONFIG_ARGS='--enable-intel-consistency --enable-vectorized-lrtm --enable-parallel-netcdf'

    ################################################################################

    "${ICON_DIR}/configure" \
        AR="${AR}" \
        BUILD_ENV="${BUILD_ENV}" \
        CC="${CC}" \
        CFLAGS="${CFLAGS}" \
        CPPFLAGS="${CPPFLAGS}" \
        FC="${FC}" \
        FCFLAGS="${FCFLAGS}" \
        ICON_FCFLAGS="${ICON_FCFLAGS}" \
        ICON_OCEAN_FCFLAGS="${ICON_OCEAN_FCFLAGS}" \
        LDFLAGS="${LDFLAGS}" \
        LIBS="${LIBS}" \
        MPI_LAUNCH="${MPI_LAUNCH}" \
        PYTHON="${PYTHON}" \
        ${EXTRA_CONFIG_ARGS} \
        "$@"

    for arg in "$@"; do
        case $arg in
            -help | --help | --hel | --he | -h | -help=r* | --help=r* | --hel=r* | --he=r* | -hr* | -help=s* | --help=s* | --hel=s* | --he=s* | -hs*)
                test -n "${EXTRA_CONFIG_ARGS}" && echo '' && echo "This wrapper script ('$0') calls the configure script with the following extra arguments, which might override the default values listed above: ${EXTRA_CONFIG_ARGS}"
                exit 0 ;;
        esac
    done

    # Copy runscript-related files when building out-of-source:
    if test "$(pwd)" != "$(cd "${ICON_DIR}"; pwd)"; then
        echo "Copying runscript input files from the source directory..."
        rsync -uavz "${ICON_DIR}/run" . --exclude='*.in' --exclude='.*' --exclude='standard_*'
        ln -sf -t run/ "${ICON_DIR}"/run/standard_*
        ln -sf set-up.info run/SETUP.config
        rsync -uavz "${ICON_DIR}/externals" . --exclude='.git' --exclude='*.f90' --exclude='*.F90' --exclude='*.c' --exclude='*.h' --exclude='*.Po' --exclude='tests' --exclude='rrtmgp*.nc' --exclude='*.mod' --exclude='*.o'
        rsync -uavz "${ICON_DIR}/make_runscripts" .
        ln -sf "${ICON_DIR}/data"
        ln -sf "${ICON_DIR}/vertical_coord_tables"
    fi

Before running the configuration, initialize the submodules located in icon-scc/externals:

    $ cd /path/to/icon-scc
    $ git submodule init
    $ git submodule update

After creating the new configuration file, build ICON with the script icon_build_script:

    # Replace ../config/dkrz/mistral.intel-17.0.6 in icon_build_script with the name of your new configuration file.

    # Load modules and set variables for libraries and compilers.
    $ module load intel/2021.3.0
    $ MPI=hpcx-2.10.0
    $ export HDF5_DIR=<path>/hdf5-1.12.1-$MPI
    $ export NETCDF_DIR=<path>/netcdf-4.8.1-$MPI

    # HPC-X was downloaded from the link below and compiled using Intel compiler 2021.3.0.
    $ module use <path>/hpcx-2.10.0/modulefiles
    $ module load hpcx
    $ export CC=mpicc
    $ export FC=mpif90

    # Execute the build script.
    $ ./icon_build_script

The ICON executable is created in /path/to/icon-scc/build/bin. HPC-X is available from NVIDIA at https://developer.nvidia.com/networking/hpc-x.
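Because the sample configuration wrapper runs with "set -eu" and reads HDF5_DIR, NETCDF_DIR, CC and FC from the environment, a missing variable stops the build early with an "unbound variable" error. A small preflight check such as the following can be run in the same shell before ./icon_build_script; check_build_env is a hypothetical helper, not part of icon-scc.

```bash
#!/bin/bash
# Sketch: check the environment that the sample config wrapper expects.
# Returns non-zero and prints the problems to stderr if anything is missing.
check_build_env() {
  local status=0 var
  for var in HDF5_DIR NETCDF_DIR CC FC; do
    if [ -z "${!var:-}" ]; then              # indirect expansion: value of $var
      echo "missing: $var" >&2
      status=1
    fi
  done
  # LDFLAGS in the config adds -L${HDF5_ROOT}/lib and -L${NETCDF_ROOT}/lib.
  for var in HDF5_DIR NETCDF_DIR; do
    if [ -n "${!var:-}" ] && [ ! -d "${!var}/lib" ]; then
      echo "no lib/ directory under $var=${!var}" >&2
      status=1
    fi
  done
  # CC and FC must resolve to commands, e.g. the mpicc/mpif90 wrappers.
  for var in CC FC; do
    if [ -n "${!var:-}" ] && ! command -v "${!var}" >/dev/null 2>&1; then
      echo "$var=${!var} is not on PATH" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example: "check_build_env && ./icon_build_script".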

Interactive Mode Test Example

To test your ICON build, you can run a first small experiment in interactive mode. To do so, change to the directory interactive_run and execute ../build/bin/icon on the command line:

    $ cd /path/to/icon-scc/interactive_run
    $ ../build/bin/icon

When the run finishes successfully, the "Timer report" is printed at the end of stdout and the file finish.status contains the string "OK".

    Timer report

    ------------------------------- ------- ------------ ------------ ------------ ------------- ------------- -------------
    name                            # calls t_min        t_avg        t_max        total min (s) total max (s) total avg (s)
    ------------------------------- ------- ------------ ------------ ------------ ------------- ------------- -------------
    total                           1       03m29s       03m29s       03m29s       209.619       209.619       209.619
    L solve_ab                      240     0.15084s     0.26483s     27.0229s     63.559        63.559        63.559
    L ab_expl                       240     0.09686s     0.09740s     0.10715s     23.375        23.375        23.375
    L extra1                        240     0.03408s     0.03435s     0.03754s     8.244         8.244         8.244
    L extra2                        240     0.02285s     0.02301s     0.02559s     5.523         5.523         5.523
    L extra3                        240     0.00792s     0.00799s     0.00887s     1.917         1.917         1.917
    L extra4                        240     0.03187s     0.03204s     0.03514s     7.690         7.690         7.690
    L ab_rhs4sfc                    240     0.01947s     0.01957s     0.02058s     4.697         4.697         4.697
    L triv-t init                   1       0.00013s     0.00013s     0.00013s     0.000         0.000         0.000
    ...
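To see which parts of a run dominate, the timer table can be sorted by its last column. The sketch below assumes the single-process layout shown above (name, # calls, t_min, t_avg, t_max, total min, total max, total avg) and parses each row from the right, because timer names such as "L triv-t init" contain spaces. The multi-rank report of the coupled run, with its timestamps and rank columns, has a different layout and would need other field positions. top_timers is a hypothetical helper name.

```bash
#!/bin/bash
# Sketch: print the N most expensive timers of an ICON "Timer report", ranked
# by the "total avg (s)" column. Reads the given file(s), or stdin if none.
top_timers() {
  local n=${1:-5}
  shift || true
  awk '
    # Data rows end in a number and have an integer "# calls" 7 fields from the end.
    NF >= 8 && $NF ~ /^[0-9]+(\.[0-9]+)?$/ && $(NF-6) ~ /^[0-9]+$/ {
      name = $1
      for (i = 2; i <= NF - 7; i++) name = name " " $i
      if (name != "total") printf "%s\t%s\n", $NF, name   # skip the overall total
    }' "$@" | sort -t "$(printf '\t')" -k1,1gr | head -n "$n"
}
```

For example, "top_timers 5 < logfile" prints the five largest timers as tab-separated "seconds name" pairs; header and separator lines are skipped because their last field is not a number.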

Batch Mode Test Example

To run an experiment in batch mode, you first need to create the appropriate run script by executing the script make_runscripts in ICON's root directory. The experiment to prepare and run is named test_ocean_omip_10days; its template with the experiment-specific data already exists.

    $ cd /path/to/icon-scc/build
    $ /path/to/icon-scc/make_runscripts -s test_ocean_omip_10days

An experiment script named exp.test_ocean_omip_10days.run should now exist in the run directory. Add Slurm directives to this script and adjust the number of nodes and processes per node before submitting the job:

    $ cd /path/to/icon-scc/build/run
    $ sbatch exp.test_ocean_omip_10days.run
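Slurm only honours #SBATCH lines that appear before the first executable command of a script, so the directives belong directly after the shebang line. The hypothetical helper below inserts them there. The node count, tasks per node, partition and time limit are placeholders to adapt to your cluster; if the generated script already contains #SBATCH lines, it is simpler to edit those instead.

```bash
#!/bin/bash
# Sketch: insert Slurm directives directly after the shebang line of a run script.
# All values passed in are placeholders; adapt them to your cluster.
add_slurm_header() {
  local script=$1 nodes=$2 tasks_per_node=$3 partition=$4 time_limit=${5:-00:30:00}
  local tmp
  tmp=$(mktemp) || return 1
  {
    head -n 1 "$script"                       # keep the #! line first
    echo "#SBATCH --job-name=$(basename "$script" .run)"
    echo "#SBATCH --nodes=$nodes"
    echo "#SBATCH --ntasks-per-node=$tasks_per_node"
    echo "#SBATCH --partition=$partition"
    echo "#SBATCH --time=$time_limit"
    tail -n +2 "$script"                      # the rest of the original script
  } > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$script"                      # overwrite in place, keeping permissions
  rm -f "$tmp"
}
```

For example: "add_slurm_header exp.test_ocean_omip_10days.run 1 40 <partition>".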

If the run was successful, the end of the log file shows the following message after the "Timer report":

    ============================
    Script run successfully: OK
    ============================
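When several runs are submitted, the check for this message can be scripted. The sketch below only looks at the last lines of a given log file; the helper name is hypothetical, and the log file name depends on your run script and Slurm settings, so it is passed in explicitly. The grep also matches the timestamped variant of the banner printed by the coupled experiment.

```bash
#!/bin/bash
# Sketch: succeed only if the log file exists and its tail contains the
# success banner quoted above.
log_reports_success() {
  local log=$1
  [ -f "$log" ] && tail -n 20 "$log" | grep -q 'Script run successfully: OK'
}
```

For example: "log_reports_success <log file> && echo 'run OK'".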

Download the input data from: TBD

The input data consist of three files: restart.tar.gz, hd.tar.gz and grids.tar.gz.

Unpack them into the directory /path/to/icon-scc/build/data.
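Unpacking the three archives can be scripted as below. The source directory argument (wherever you downloaded or copied the files) and the helper name are assumptions. Note that grids.tar.gz alone is more than 8 GB, so make sure the target file system has enough space.

```bash
#!/bin/bash
# Sketch: unpack restart.tar.gz, hd.tar.gz and grids.tar.gz from SRC into DEST.
unpack_inputs() {
  local src=$1 dest=$2 f
  mkdir -p "$dest" || return 1
  for f in restart.tar.gz hd.tar.gz grids.tar.gz; do
    [ -f "$src/$f" ] || { echo "missing $src/$f" >&2; return 1; }
    tar -xzf "$src/$f" -C "$dest" || return 1
  done
}
```

For example: "unpack_inputs ~/Icon-input /path/to/icon-scc/build/data".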

Configuration of the experiment

Go to the directory where the configuration files of the experiment are located:

    $ cd /path/to/icon-scc/build/run/standard_experiments

In the file DEFAULT.config, set MODEL_ROOT on line 33 to the directory where you have installed icon-scc; it needs plenty of free space for I/O. On line 46, set EXP_SUBDIR = build/$EXPERIMENTS_SUBDIR/$EXP_ID. In the file scc.config, set your ACCOUNT on line 6. The file scc.config also contains the performance tunables described below.
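If you prefer not to edit these files by hand, the settings can be changed with sed. The sketch below matches lines by key instead of by line number, which is more robust if the line numbers shift between versions. It assumes each setting is written on one line as KEY = value, as in the EXP_SUBDIR example above; set_config_key is a hypothetical helper name.

```bash
#!/bin/bash
# Sketch: set "KEY = value" in a config file such as DEFAULT.config, matching by
# key name. Assumes one setting per line; keeps the previous version as FILE.bak.
set_config_key() {
  local file=$1 key=$2 value=$3 escaped
  if ! grep -q "^[[:space:]]*$key[[:space:]]*=" "$file"; then
    echo "$key not found in $file" >&2
    return 1
  fi
  # Escape the characters that are special in a sed replacement: \ & and the | delimiter.
  escaped=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
  sed -i.bak "s|^\([[:space:]]*$key[[:space:]]*=\).*|\1 $escaped|" "$file"
}
```

For example: "set_config_key DEFAULT.config MODEL_ROOT /scratch/$USER/icon-scc" and "set_config_key DEFAULT.config EXP_SUBDIR 'build/$EXPERIMENTS_SUBDIR/$EXP_ID'" (the single quotes keep the $ references literal).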

Go to the main directory of ICON and run the experiment script:

    $ cd /path/to/icon-scc
    $ pip install --user six jinja2
    $ ./RUN_ISC_SCC_EXPERIMENT

If you encounter the following error:

    Oops: missing option "mpi_total_procs" in interpolation while reading key 'use_mpi_startrun'

add "export mpi_total_procs=1" to the script as a workaround and run ./RUN_ISC_SCC_EXPERIMENT again.

Here is a sample RUN_ISC_SCC_EXPERIMENT script:

    #!/bin/bash
    set -evx

    ICON_DIR=$PWD

    module use $ICON_DIR/etc/Modules
    module add icon-switch

    CO2=2850
    EXP_ID=exp_scc${CO2}

    cd $ICON_DIR/build/run

    # Workaround for the missing "mpi_total_procs" option (see above)
    export mpi_total_procs=1

    $ICON_DIR/utils/mkexp/mkexp standard_experiments/scc.config CO2=${CO2}

Here is how to execute ICON with SCC data:

Go to /path/to/icon-scc/build/experiments/exp_scc2850/scripts.

You can remove "#SBATCH --account=…" from all Slurm scripts.

Modify the exp_scc2850.run_start script:

Add or change the Slurm directives.

Check the number of nodes and the values of nproc and mpi_procs_pernode.

Add the path where the CDO executable is located, such as "export PATH=$BUILD_DIR/cdo-1.9.10/bin:$PATH".

If you get the error below, add the option "-L" to the cdo commands:

    cdo settaxis (Warning): Use a thread-safe NetCDF4/HDF5 library or the CDO option -L to avoid such errors.

To replace srun with mpirun, set the START variable accordingly:

    export START="mpirun -np $SLURM_NPROCS <mpi flags>"

Submitting Slurm jobs from within a compute node is not allowed on the Niagara cluster, so change the sbatch command as follows:

    sbatch exp_scc2850.post $start_date  ==>  ./exp_scc2850.post $start_date

Change the name of the Slurm partition in exp_scc2850.post and comment out its last line, subprocess.check_call(['sbatch', 'exp_scc2850.mon', str(start_date)]).
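On Niagara, the last two edits can be applied with sed. This sketch assumes the lines look exactly as quoted above (for example, that the run_start script writes $start_date rather than ${start_date}); patch_niagara_scripts is a hypothetical name, and the partition name still has to be changed by hand.

```bash
#!/bin/bash
# Sketch: apply the two Niagara-specific edits with sed; keeps *.bak backups.
patch_niagara_scripts() {
  local dir=${1:-.}
  # run_start: run the post-processing script directly instead of submitting it.
  sed -i.bak 's|sbatch exp_scc2850\.post \$start_date|./exp_scc2850.post $start_date|' \
    "$dir/exp_scc2850.run_start" || return 1
  # post: comment out the line that submits the monitoring job, keeping its indentation.
  sed -i.bak "s|^\([[:space:]]*\)\(subprocess\.check_call(\['sbatch', 'exp_scc2850\.mon'.*\)|\1# \2|" \
    "$dir/exp_scc2850.post"
}
```

For example: "patch_niagara_scripts /path/to/icon-scc/build/experiments/exp_scc2850/scripts".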

Finally, submit the job.

    $ sbatch exp_scc2850.run_start

Sample output:

    2022-01-21T22:30:51: Timer report, ranks 0-119
    2022-01-21T22:30:51: ------------------------------- --------- ------------ -------- ------------ ------------ -------- ------------- -------------- ------------- -------------- -------------
    2022-01-21T22:30:51: name                            # calls   t_min        min rank t_avg        t_max        max rank total min (s) total min rank total max (s) total max rank total avg (s)
    2022-01-21T22:30:51: ------------------------------- --------- ------------ -------- ------------ ------------ -------- ------------- -------------- ------------- -------------- -------------
    2022-01-21T22:30:51: total                           120       17m58s       [32]     17m58s       17m58s       [0]      1078.334      [32]           1078.559      [0]            1078.337
    2022-01-21T22:30:51: L wrt_output                    1440      0.04708s     [16]     0.06852s     4.6983s      [0]      0.782         [32]           5.301         [0]            0.822
    2022-01-21T22:30:51: L integrate_nh                  4204800   0.02318s     [2]      0.03009s     4.5782s      [16]     1042.609      [0]            1055.506      [14]           1054.241
    2022-01-21T22:30:51: L nh_solve                      37843200  0.00080s     [0]      0.00111s     4.5101s      [36]     302.543       [0]            358.653       [81]           350.879
    2022-01-21T22:30:51: L nh_hdiff                      4204800   0.00023s     [80]     0.00031s     0.00544s     [110]    10.110        [6]            12.127        [91]           10.969
    2022-01-21T22:30:51: L transport                     4204800   0.00153s     [80]     0.00197s     0.00930s     [1]      68.317        [81]           69.998        [95]           69.160
    2022-01-21T22:30:51: L iconam_echam                  4204800   0.01114s     [83]     0.01731s     0.25728s     [32]     605.278       [22]           607.797       [30]           606.650
    2022-01-21T22:30:51: ...
    2022-01-21T22:30:52: OK
    2022-01-21T22:30:52: ============================
    2022-01-21T22:30:52: Script run successfully: OK
    2022-01-21T22:30:52: ============================

The output of the experiment is located in the directory /path/to/icon-scc/build/experiments/exp_scc2850/outdata.

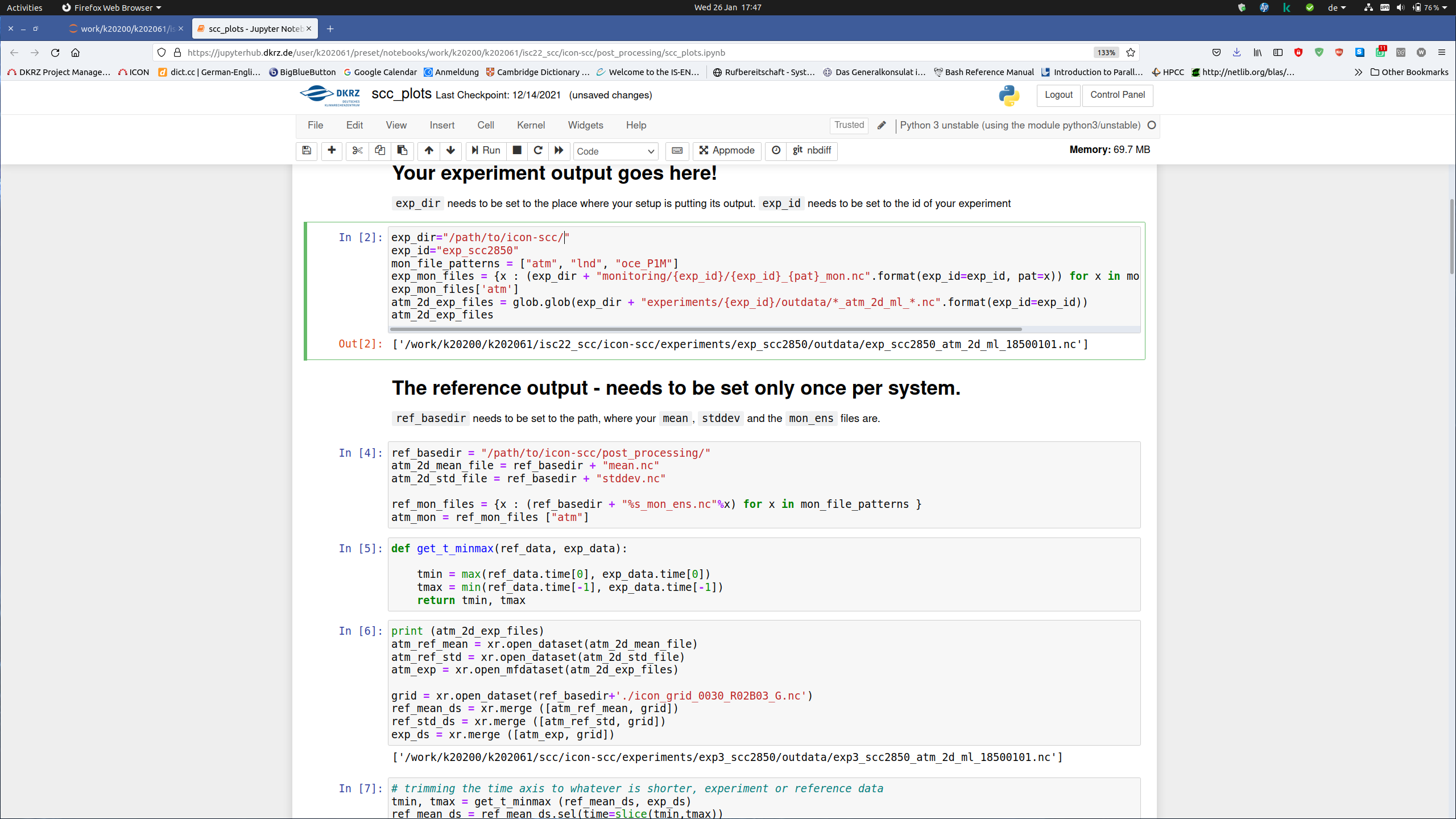

For post-processing, open the notebook scc_plots.ipynb in JupyterHub. It is located in /path/to/icon-scc/build/post_processing.

Adapt exp_dir, exp_id and ref_basedir in the notebook, and run it from top to bottom.

    exp_dir="/path/to/icon-scc"
    exp_id="exp_scc2850"
    ref_basedir="/path/to/icon-scc/post_processing"

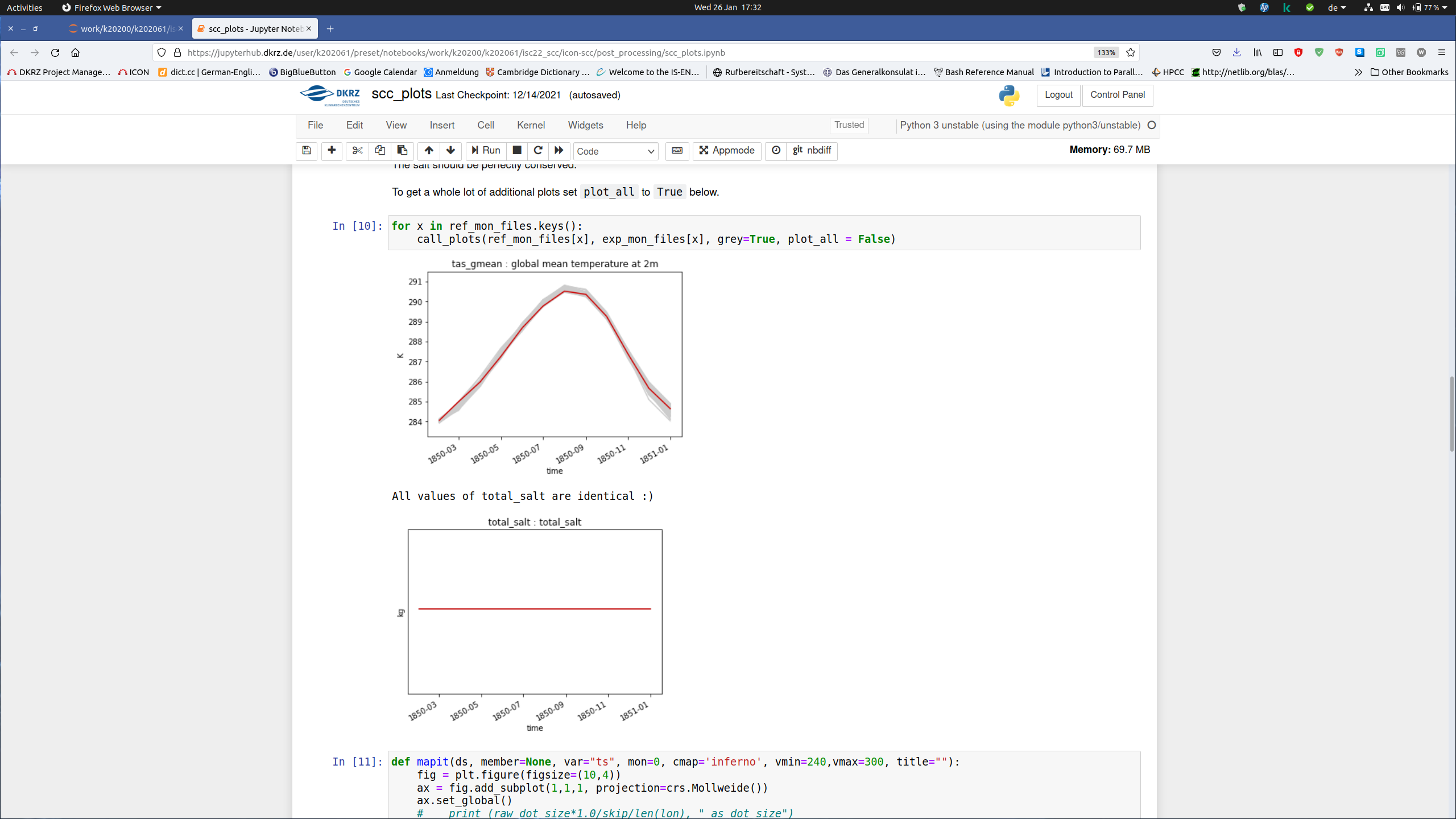

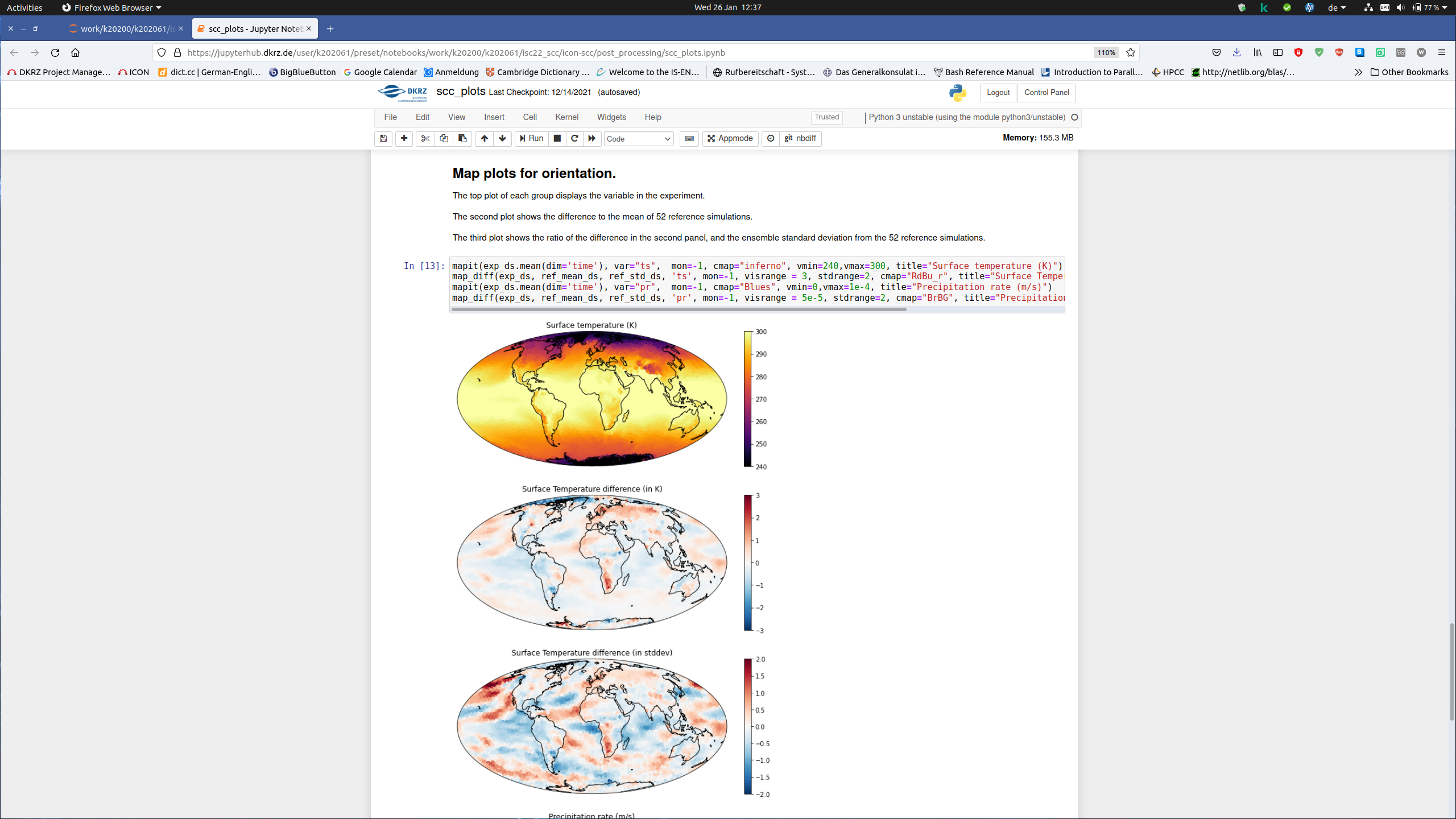

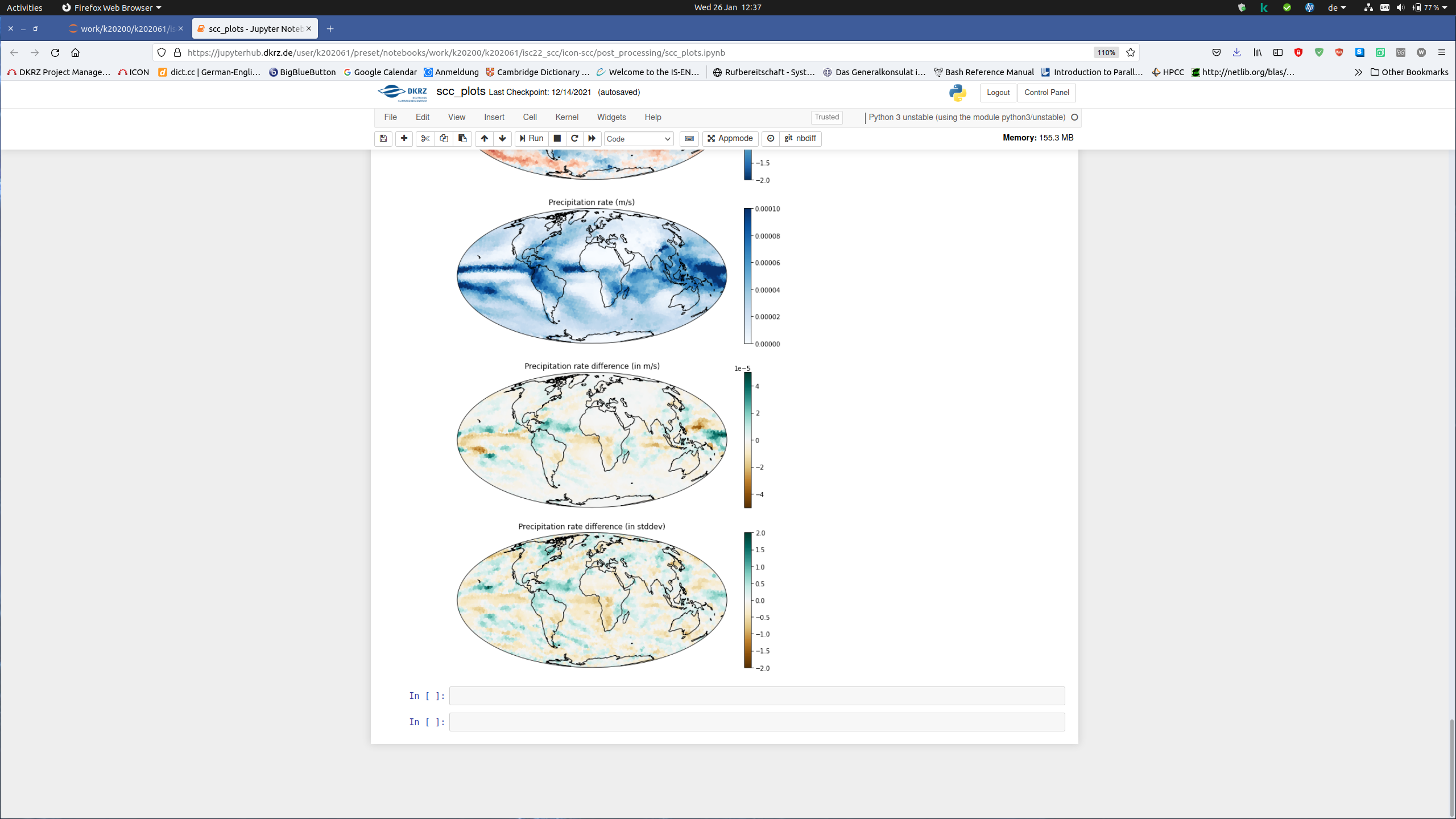

This evaluation script generates two line plots that are used to check the correctness of the results: the global mean 2 m temperature and the total ocean salt content, as shown in the pictures.

The line plot of the global mean temperature should show a red line rising and falling again in front of a grey outline indicating the 50 simulations we performed with this setup and slightly modified CO2 concentrations (basically playing the butterfly for the butterfly effect).

The plot of the total ocean salt content must be absolutely flat, because the model must not change it. If it does change, something went seriously wrong. Both criteria must be fulfilled.

In the lower part, you will find six maps, for orientation only.

The first one shows the time-mean temperature (273.15 K is 0 °C, or 32 °F). You should see warm tropics, cold poles, and mountain ranges that appear colder because temperature decreases with altitude. The second and third maps show the difference between your run and the mean of the 50-member ensemble, so basically the effect of your butterfly.

The lower three maps show precipitation and precipitation differences. The first one should show strong precipitation in the tropics, a dry Sahara region, and fairly dry conditions in Siberia and Antarctica.

The pictures that come with the script are typical of what you should expect, but do not expect to reproduce them exactly.

The run script contains the following parameters, which can be used to tune the performance of the experiment:

nproma: a constant that is set at run time. It specifies the blocking length for array dimensioning and inner loop lengths. The arrays in ICON are organized in blocks of length nproma, and each block occupies a contiguous chunk of memory. A block of nproma elements can be processed as one vector by vector processors, or by one thread in a threaded environment. Because nproma is also the length of the innermost loops, it can be used to achieve better cache blocking. A typical value for this experiment on Intel Broadwell CPUs is nproma=16; on vector processors it depends on the length of the vector registers. On NEC Aurora you can try nproma=256 or nproma=512. For details about the ICON grid data and loop structures, refer to section 2 of the ICON Grid Documentation in icon_grid.pdf below. The nproma for the atmosphere can be set on line 21 and the nproma for the ocean on line 24 in the file

    /path/to/icon-scc/build/run/standard_experiments/scc.config
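To see how nproma determines the block layout, the following sketch computes the number of blocks and the length of the last, possibly partial, block for a given number of cells. The names nblks and npromz mirror the naming used in ICON's grid data; the count of 1000 cells per MPI rank is purely illustrative.

```bash
#!/bin/bash
# Sketch of the nproma blocking arithmetic: ncells cells are stored in nblks
# blocks of length nproma; the last block holds only npromz cells.
nproma_blocks() {
  local ncells=$1 nproma=$2
  local nblks=$(( (ncells + nproma - 1) / nproma ))   # ceiling division
  local npromz=$(( ncells - (nblks - 1) * nproma ))   # length of the last block
  echo "$nblks $npromz"
}

# Compare a few candidate values for a hypothetical 1000 cells on one rank.
for nproma in 8 16 32 512; do
  read -r nblks npromz <<< "$(nproma_blocks 1000 "$nproma")"
  echo "nproma=$nproma -> $nblks blocks, last block holds $npromz cells"
done
```

A short last block means a shorter final inner loop, which is one more reason to measure several nproma values rather than rely on a single rule of thumb.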

mpi_oce_nodes/mpi_oce_procs: The experiment you are running is a coupled atmosphere-ocean simulation. By default, 1 node is assigned to the ocean and 3 nodes to the atmosphere. For better load balancing, you can adjust the number of MPI tasks used by the atmosphere and the ocean by changing the value of the parameter mpi_oce_nodes. The number of nodes assigned to the atmosphere is then total_nodes - mpi_oce_nodes. Note that mpi_oce_nodes defines the number of nodes, not the number of MPI tasks: each sub-model uses all MPI tasks of the nodes assigned to it. You can set this parameter on line 14 in the file

    /path/to/icon-scc/build/run/standard_experiments/scc.config

If you do not want to assign a full node to the ocean and prefer to set the number of MPI tasks yourself, you can use the parameter mpi_oce_procs in the run script exp_scc2850.run_start.
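The node split follows directly from the rule above. As an illustration, assuming 40 MPI tasks per node (a placeholder; use your cluster's core count), a 4-node job with mpi_oce_nodes=1 gives 120 atmosphere and 40 ocean tasks; 120 atmosphere ranks would be consistent with the "ranks 0-119" timer report in the sample output above. split_nodes is a hypothetical helper.

```bash
#!/bin/bash
# Sketch: how mpi_oce_nodes splits nodes and MPI tasks between the components,
# assuming every node runs procs_per_node MPI tasks.
split_nodes() {
  local total_nodes=$1 oce_nodes=$2 procs_per_node=$3
  local atm_nodes=$(( total_nodes - oce_nodes ))
  if (( oce_nodes < 1 || atm_nodes < 1 )); then
    echo "each component needs at least one node" >&2
    return 1
  fi
  echo "atm_nodes=$atm_nodes atm_procs=$(( atm_nodes * procs_per_node ))" \
       "oce_nodes=$oce_nodes oce_procs=$(( oce_nodes * procs_per_node ))"
}

split_nodes 4 1 40   # -> atm_nodes=3 atm_procs=120 oce_nodes=1 oce_procs=40
split_nodes 4 2 40   # an alternative split to compare
```

Because the split is in whole nodes, it is coarse; mpi_oce_procs is the finer-grained alternative described above.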

Finer-grained load balancing can be achieved through proper process placement. With Slurm, the srun option "--distribution=<block|cyclic[:block|cyclic]>" can be used to distribute the MPI tasks across nodes and, within a node, across sockets. For an example, refer to the slides in the file slurm_process_placement.pdf. You can set the distribution on line 10 in the file

    /path/to/icon-scc/build/run/standard_experiments/scc.config
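The difference between block and cyclic placement at the node level can be illustrated with a small model. This is a simplification, not Slurm's algorithm: it assumes the tasks divide evenly over the nodes and ignores CPUs per node and the socket level (the part after the colon, as in "srun --distribution=block:cyclic").

```bash
#!/bin/bash
# Simplified model: node index of each MPI rank under block or cyclic distribution.
rank_to_node() {
  local ntasks=$1 nnodes=$2 mode=$3 r
  local -a nodes=()
  if (( ntasks % nnodes != 0 )); then
    echo "ntasks must be a multiple of nnodes" >&2
    return 1
  fi
  local per_node=$(( ntasks / nnodes ))
  for (( r = 0; r < ntasks; r++ )); do
    case $mode in
      block)  nodes+=( $(( r / per_node )) ) ;;   # fill node 0, then node 1, ...
      cyclic) nodes+=( $(( r % nnodes )) ) ;;     # round-robin over the nodes
      *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
  done
  echo "${nodes[*]}"
}

rank_to_node 8 2 block    # 0 0 0 0 1 1 1 1
rank_to_node 8 2 cyclic   # 0 1 0 1 0 1 0 1
```

With block placement, neighbouring ranks (which often exchange halo data) share a node; with cyclic placement, they are spread out, which changes both the communication pattern and the per-node memory bandwidth demand.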

On the Bridges-2 cluster, copy the input files from the following location to your home directory:

    /ocean/projects/cis210088p/davidcho/Icon-input

    [maor@bridges2-login011 ~]$ ll /ocean/projects/cis210088p/davidcho/Icon-input
    total 8458004
    -rw-r--r-- 1 davidcho cis210088p 8356853152 Mar  1 13:06 grids.tar.gz
    -rw-r--r-- 1 davidcho cis210088p     451058 Mar  1 13:06 hd.tar.gz
    -rw-r--r-- 1 davidcho cis210088p  303674079 Mar  1 13:06 restart.tar.gz
    [maor@bridges2-login011 ~]$ cp -r /ocean/projects/cis210088p/davidcho/Icon-input .

On the Niagara cluster, copy the input files from the following location to your home directory:

    /scratch/i/iscscc-scinet/davidcho01/Icon-input

GOAL: speed up this experiment setup by porting and tuning ICON to the newer architectures of the Niagara and Bridges-2 clusters. Run ICON with the coupled atmosphere-ocean experiment as described in section "Running ICON for the competition" and submit the results, assuming a 4-node cluster. The simulated time is 1 model year and the reference wall-clock time is 30 minutes. Your main task is to beat the reference time, i.e. make ICON simulate 1 year in less than 30 minutes.
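The goal can also be expressed as throughput. Simulated years per day (SYPD) is a common climate-model metric, not one defined on this page: 1 simulated year in 30 minutes corresponds to 1 × 86400 / 1800 = 48 SYPD, so beating the reference means exceeding 48 SYPD. The helpers below are a small sketch of that arithmetic.

```bash
#!/bin/bash
# Sketch: simulated years per day from wall-clock seconds, and a check against
# the 30-minute (1800 s) reference for 1 simulated year.
sypd() {
  local sim_years=$1 wall_seconds=$2
  awk -v y="$sim_years" -v s="$wall_seconds" 'BEGIN { printf "%.2f\n", y * 86400 / s }'
}

beats_reference() {
  # Exit status 0 if the wall-clock time in seconds (may be fractional) is below 1800.
  awk -v s="$1" 'BEGIN { exit !(s < 1800) }'
}

sypd 1 1800   # 48.00, the reference
sypd 1 1500   # 57.60
```

For example, "beats_reference 1712.4 && echo 'faster than the reference'".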

Porting and tuning for a specific processor architecture: for single-node/processor performance optimization, the parameter nproma can be used to tune the model towards better vectorization (AVX2/AVX-512).

Load balancing: upon successful completion, the log file contains two "Timer reports", one for the atmosphere and one for the ocean. By inspecting these reports, identify the parts that consume the most CPU time. The timer coupling_1stget gives an indication of the extent of load imbalance between atmosphere and ocean. Can you think of a better load-balancing scheme?

Profile the application with IPM and submit the results as a PDF. Based on this profile, analyze the MPI communication pattern. What are the main bottlenecks?
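A common way to collect an IPM profile without recompiling is to preload the IPM library into the MPI processes only. The fragment below is a sketch for the mpirun variant of the START variable in exp_scc2850.run_start; the library path is a placeholder, and the -x option for forwarding environment variables applies to Open MPI based launchers such as HPC-X. For the tested procedure, see the "Profiling using IPM and HPC-X" page mentioned earlier.

```bash
# Placeholder path: point this at the libipm.so of your IPM installation.
IPM_LIB=/path/to/ipm/lib/libipm.so

# Preload IPM only into the MPI ranks, so other tools in the script (e.g. cdo)
# are not profiled. IPM_REPORT=full prints a summary at MPI_Finalize;
# IPM_LOG=full writes the XML profile log.
export START="mpirun -np $SLURM_NPROCS -x LD_PRELOAD=$IPM_LIB -x IPM_REPORT=full -x IPM_LOG=full"

# After the run, convert the XML log into an HTML report, e.g.:
#   ipm_parse -html <ipm_xml_log>
```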

Your optimizations need to yield acceptable results. Check the correctness of your results with the Python notebook scc_plots.ipynb, as described above in the section "Postprocessing".

Return the results in the subdirectory outdata of your experiment, your scc_plots.ipynb, and the profiles you have produced together with your analysis in a PDF document.
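A sketch for collecting these files into one archive follows. The helper name package_results and the archive layout are assumptions, so follow the organizers' instructions if they specify a different submission format; the notebook location is the build/post_processing directory given above.

```bash
#!/bin/bash
# Sketch: archive outdata, scc_plots.ipynb and any extra files (e.g. PDFs).
# Usage: package_results ICON_DIR EXP_ID ARCHIVE [EXTRA_FILE...]
package_results() {
  local icon_dir=$1 exp_id=$2 archive=$3 f
  shift 3
  local -a args=(-C "$icon_dir/build/experiments/$exp_id" outdata
                 -C "$icon_dir/build/post_processing" scc_plots.ipynb)
  for f in "$@"; do
    # Store each extra file at the top level of the archive under its base name.
    args+=(-C "$(cd "$(dirname "$f")" && pwd)" "$(basename "$f")")
  done
  tar -czf "$archive" "${args[@]}"
}
```

For example: "package_results /path/to/icon-scc exp_scc2850 $PWD/results.tar.gz $PWD/ipm_profile.pdf $PWD/analysis.pdf".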

If your team has a Twitter account, publish the figure (or video) with the hashtags #ISC22 and #ISC22_SCC (mark/tag the figure or video with your team name/university).