This page provides an overview of NWChem, together with configuration guidance and tasks, for ISC22 Student Cluster Competition teams.

NWChem is a widely used open-source computational chemistry software package, written primarily in Fortran. It supports scalable parallel implementations of atomic orbital and plane-wave density-functional theory, many-body methods from Møller-Plesset perturbation theory to coupled-cluster with quadruple excitations, and a number of multiscale methods and methods for computing molecular and macroscopic properties. It is used on computers from Apple M1 laptops to the largest supercomputers, supporting multicore CPUs and GPUs with OpenMP and other programming models. NWChem uses MPI for parallelism, usually hidden by the Global Arrays programming model, which uses one-sided communication to support a data-centric abstraction of multidimensional arrays across shared and distributed memory protocols. NWChem was created by Pacific Northwest National Laboratory in the 1990s, and has been under continuous development by a team based at national laboratories, universities, and industry for 25 years. NWChem has been cited thousands of times and was a finalist for the Gordon Bell Prize in 2009.


NWChem is available for download from GitHub:

    git clone https://github.com/nwchemgit/nwchem
    cd nwchem/src/tools && ./get-tools-github

Once you have downloaded NWChem, the environment needs to be set up before building a working executable for your cluster. In the following example, we show how to compile it using Intel compilers, the Intel Math Kernel Library (MKL) and Open MPI from HPC-X:

    module load intel/2021.3
    module load mkl/2021.3.0
    module load hpcx/2.9.0
    export NWCHEM_TOP=/path-to-directory-where-you-ran-git/nwchem
    export NWCHEM_TARGET=LINUX64
    export ARMCI_NETWORK=ARMCI-MPI
    export USE_MPI=y
    export NWCHEM_MODULES=qm
    export BLASOPT="-mkl"
    export BLAS_SIZE=8
    export LAPACK_LIB="-mkl"
    export USE_SCALAPACK=y
    export SCALAPACK="-mkl -lmkl_scalapack_ilp64 -lmkl_blacs_openmpi_ilp64"
    export SCALAPACK_SIZE=8
    export FC=ifort

Full details can be found in https://nwchemgit.github.io/Compiling-NWChem.html
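Before starting a long build, it can help to confirm that the variables from the example above are actually set in the current shell. The following is a minimal sketch: the helper name unset_vars is hypothetical, and the list of variables is simply taken from the example, not from an authoritative list of what NWChem requires.

```shell
# Hypothetical helper (not part of NWChem): print the name of every
# variable given as an argument that is unset or empty.
unset_vars() {
    local name value
    for name in "$@"; do
        # Look up the variable whose name is stored in $name.
        # The names are fixed strings chosen by us, so eval is safe here.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name"
        fi
    done
}

# Variables taken from the build example above.
missing=$(unset_vars NWCHEM_TOP NWCHEM_TARGET ARMCI_NETWORK USE_MPI NWCHEM_MODULES)
if [ -n "$missing" ]; then
    # $missing is left unquoted on purpose so the names print on one line.
    echo "Not set:" $missing >&2
fi
```

If nothing is printed, all of the listed variables have a value. This does not check that the values are sensible.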

ARMCI-MPI must be built before compiling NWChem. This requires the Linux packages autoconf, automake, m4 and libtool. If they aren’t present and you can’t install them via a package manager, ARMCI-MPI provides a link to instructions for installing them manually.

    cd $NWCHEM_TOP/src/tools
    ./install-armci-mpi

This step should succeed. If it does not, the most likely cause is that neither mpicc nor the compiler named by $MPICC is available in your environment. Note also that LIBMPI must match the output of mpifort -show for NWChem to link correctly.
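A quick way to rule out the most common failure is to check that the build tools and the MPI compiler wrapper can be found on PATH before running the install script. This is a sketch under two assumptions: missing_commands is a hypothetical helper, and the libtool package is detected through its libtoolize command.

```shell
# Hypothetical helper: print each command name from the arguments
# that cannot be found on PATH.
missing_commands() {
    local cmd
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
    done
}

# Build tools named above, plus the MPI C compiler wrapper.
# Uses $MPICC if it is set, otherwise falls back to mpicc.
missing_commands autoconf automake m4 libtoolize "${MPICC:-mpicc}"
```

Any name that is printed still needs to be installed or added to PATH, for example by loading the corresponding module.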

After the environment has been set up, NWChem is built in two steps: building the configuration file nwchem_config.h, and making the executable.

    cd $NWCHEM_TOP/src
    make nwchem_config
    make -j10

The last make command takes a while to complete; when it is done, there should be an executable called nwchem under $NWCHEM_TOP/bin/$NWCHEM_TARGET.

Once you have an executable, running NWChem is as simple as choosing a suitable input and running it with your chosen MPI application launcher:

    mpirun -np 4 $NWCHEM_TOP/bin/$NWCHEM_TARGET/nwchem $NWCHEM_TOP/web/benchmarks/dft/siosi3.nw

The above (small) test problem runs in about 20 seconds on one node of the HPC-AI Advisory Council’s helios cluster. If your test goes well, the last line of your output should look as follows:

    Total times cpu: 18.0s wall: 20.3s

(The cpu and wall values themselves will differ, of course.)

The performance metric you will be trying to minimize is the wall time in the last line.
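Since the wall time is the number to minimize, it is convenient to pull it out of each output automatically. The following is a minimal sketch, assuming the timing line has the format shown above; nwchem_wall_time is a hypothetical helper name, not an NWChem tool.

```shell
# Hypothetical helper: print the wall time in seconds from the last
# "Total times" line of an NWChem output.
# Reads the files given as arguments, or standard input if there are none.
nwchem_wall_time() {
    awk '/Total times/ {
             # The value follows the "wall:" field; strip the trailing "s".
             for (i = 1; i < NF; i++)
                 if ($i == "wall:") { t = $(i + 1); sub(/s$/, "", t); wall = t }
         }
         END { if (wall != "") print wall; else exit 1 }' "$@"
}

# Example using the line shown above:
echo "Total times cpu: 18.0s wall: 20.3s" | nwchem_wall_time    # prints 20.3
```

The function returns a non-zero status when no timing line is found, which usually means the job did not finish.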

There are a number of tunable parameters that affect the performance of NWChem. One of them is the MPI library used, and how Global Arrays uses MPI. Unlike many HPC codes, Global Arrays is based on a one-sided communication model rather than message passing, and is therefore sensitive to implementation details such as RDMA and asynchronous progress. Another key issue is the parallel execution configuration. NWChem often runs well with flat MPI parallelism, although with larger core counts and/or smaller memory capacities, a mixture of MPI and OpenMP can improve the performance of some modules.

Neither of the above requires source code changes. Additional tuning opportunities exist if one modifies the source code of bottleneck kernels, especially to make better use of fine-grain parallelism, either on CPUs or GPUs.

All of these issues will be described in the following sections.

If you would like to understand more about how NWChem works, https://www.nersc.gov/assets/Uploads/Hammond-NERSC-OpenMP-August-2019-1.pdf may be useful.

The short version of tuning MPI and GA in NWChem is:

Try ARMCI_NETWORK=ARMCI-MPI, ARMCI_NETWORK=MPI-PR, and ARMCI_NETWORK=MPI-TS. Which one performs better varies by use case. Note that you must launch the MPI-PR binary with more than one MPI process, because the runtime devotes one MPI process to communication. The other two implementations allow binaries to run with one MPI process. Please remember this when computing parallel efficiency: the difference between N and N-1 is significant for small N.

Try at least two different MPI libraries, particularly with ARMCI-MPI. The performance of ARMCI-MPI with Open MPI, Intel MPI and MVAPICH2 will often be noticeably different.

There are more ways to tune NWChem than these, but these are the straightforward ones for you to consider first.
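To see why N versus N-1 matters for MPI-PR, the parallel efficiency of a run relative to a reference run can be written as (t_ref * c_ref) / (t * c), where t is the wall time and c is the number of processes counted. The sketch below evaluates this for made-up timings. The helper name parallel_efficiency is hypothetical, and which process count to use is a choice you should state when reporting results.

```shell
# Hypothetical helper: parallel efficiency relative to a reference run.
# Usage: parallel_efficiency T_REF C_REF T C
#   T_REF, T : wall times in seconds
#   C_REF, C : number of processes counted in each run
parallel_efficiency() {
    awk -v tr="$1" -v cr="$2" -v t="$3" -v c="$4" \
        'BEGIN { printf "%.3f\n", (tr * cr) / (t * c) }'
}

# Made-up timings: 100 s when launching 4 processes, 30 s when launching 16.
parallel_efficiency 100 4 30 16    # counting every launched process: 0.833
parallel_efficiency 100 3 30 15    # MPI-PR, counting only N-1 compute processes: 0.667
```

With these illustrative numbers the two conventions differ by more than 15 percentage points, and the gap shrinks as N grows.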

The following contains the full details of what goes on inside of Global Arrays. You do not need to study all of it. The important parts to understand are:

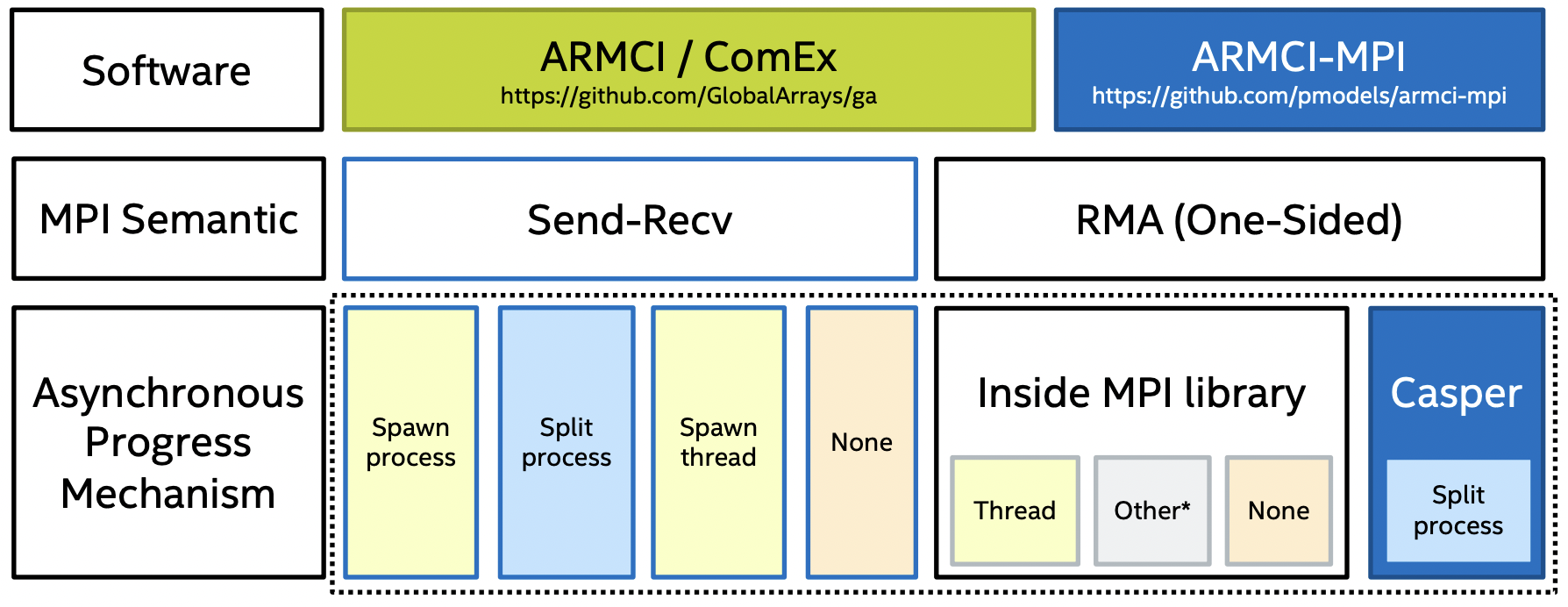

There are two implementations of the ARMCI API: the ARMCI/ComEx library distributed with Global Arrays, and the ARMCI-MPI library distributed separately.

Global Arrays can use Send-Recv or RMA (one-sided communication) internally to implement its one-sided operations. While mapping one-sided to one-sided is more natural, some MPI libraries implement Send-Recv much better than RMA, in which case the less natural mapping of one-sided communication to message-passing is more effective.

The performance of different MPI libraries can vary significantly for the communication patterns in NWChem. The choice of MPI library is an important tunable parameter for NWChem.

The presence of OpenMP in a few modules of NWChem presents an opportunity for tuning. Both of the CCSD(T) modules (TCE and semidirect) contain OpenMP in the bottlenecks.

Assuming you have 64 cores, you can run anything from 64 MPI processes with 1 OpenMP thread each (i.e. 64x1) all the way to 1x64 (1 MPI process with 64 OpenMP threads). You will likely find that NWChem does not scale beyond 8 threads per process, because of Amdahl’s Law, as well as the NUMA properties of some modern servers. However, you may find that 16x4 is better than 64x1, for example. This is particularly true when file I/O is happening, because Linux serializes it. The CCSD(T) semidirect module does nontrivial file I/O in some scenarios, so it benefits from OpenMP threading even if the compute efficiency is imperfect.

Below is a simple example of a script that could be useful for performing MPI x OpenMP scaling studies.

    #!/bin/bash
    # total number of cores available
    N=64
    # we do not expect NWChem to scale to more than 8 threads per process
    for P in $((N)) $((N/2)) $((N/4)) $((N/8)) ; do
        # threads per process, so that P x T always equals N
        T=$((N/P))
        export OMP_NUM_THREADS=${T}
        mpirun -n $P ...
    done

The CCSD(T) semidirect and TCE modules have at least one important tuning parameter.

The DFT module contains no OpenMP and has limited tuning options, most of which relate to quantum chemistry algorithms that can be ignored for the SCC. The most important tuning options relate to the ARMCI and MPI library choice, as described above.

See https://nwchemgit.github.io/TCE.html#maximizing-performance for details. The most important tuning parameters are:

2eorb: always use this.

2emet: set to 13 and see how that works.

tilesize: the default for CCSD(T) should be 20. If you set it to a much larger value, the job will crash. Smaller values are usually less efficient. You can also try https://nwchemgit.github.io/TCE.html#ccsdtcr-eomccsdt-calculations-with-large-tiles instead and see if that helps performance.

The following settings may be useful:

    ccsd
      nodisk  # regenerate integrals every iteration
              # useful if the filesystem is slow relative to the CPU
    end
    set ccsdt:memlimit 8000        # this allows local caching of communication-intensive intermediates
    set ccsd:use_ccsd_omp T        # enables OpenMP in CCSD - not likely to pay off
    set ccsd:use_trpdrv_omp T      # enables OpenMP in (T) - always pays off
    set ccsd:use_trpdrv_openacc T  # enables OpenACC in (T) - VERY EXPERIMENTAL!!!

Advanced users may attempt to improve the OpenMP code in CCSD for additional performance.

Please use https://github.com/NWChem/input-generator to generate NWChem input files for the competition. For example, you can generate the input files for 7 water molecules with the two CCSD(T) modules and 21 water molecules with the DFT module, together with the cc-pVTZ basis set, like this:

    ./make_nwchem_input.py w7 rccsd-t cc-pvtz energy   # semidirect
    ./make_nwchem_input.py w7 ccsd-t cc-pvtz energy    # TCE
    ./make_nwchem_input.py w21 b3lyp cc-pvtz energy    # DFT

It is always a good idea to set permanent_dir and scratch_dir appropriately for your system. The former path needs to be a shared folder that all nodes can see. The latter can be a local private scratch, such as /tmp. Depending on your input, you may generate large files in both, so it is not a good idea to set them to slow and/or very limited filesystems.
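Because the generated inputs default to "permanent_dir ." and "scratch_dir /tmp", you may want to rewrite those two lines per system rather than editing each file by hand. A minimal sketch using sed follows. The helper name set_nwchem_dirs and the example paths are hypothetical, and any trailing comment on the two lines is dropped.

```shell
# Hypothetical helper: print an NWChem input with its permanent_dir and
# scratch_dir lines replaced.
# Usage: set_nwchem_dirs INPUT PERMANENT_DIR SCRATCH_DIR
# Limitation: the paths must not contain '|', '&' or '\', which are
# special in the sed expressions below.
set_nwchem_dirs() {
    sed -e "s|^\([[:space:]]*permanent_dir\)[[:space:]].*|\1 $2|" \
        -e "s|^\([[:space:]]*scratch_dir\)[[:space:]].*|\1 $3|" "$1"
}

# Demonstration on a two-line stand-in for a generated input file.
tmp=$(mktemp)
printf 'permanent_dir . # you can change this\nscratch_dir /tmp # you can change this\n' > "$tmp"
set_nwchem_dirs "$tmp" /shared/nwchem/run1 /local/scratch
rm -f "$tmp"
```

The function writes the modified input to standard output, so redirect it to a new file and keep the generated original untouched.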

For CCSD(T), a smaller molecule can be used for testing, because this method is more expensive. The w5 configuration runs in approximately 10 minutes on a modern server node. For debugging, use w1 or w2.

For the SCC, a good DFT benchmark problem for 64 to 128 cores is w21. The input file for this is generated as shown above. This should run in approximately 10 minutes on 40 cores of a modern x86 server, and should scale to 4 nodes, albeit imperfectly. For debugging, teams can use a much smaller input, such as w5, while for performance experiments, w12 is a decent proxy for w21 that runs in less time.

1. Run the application with the given input file and submit the results, assuming a 4-node cluster. Run on both Niagara and Bridges-2 using 4 CPU-only nodes.

a. Semidirect CCSD(T)

The input file that follows (generated with ./make_nwchem_input.py w7 rccsd-t cc-pvtz energy) is the one that must be used. The only lines that you can change are noted as such.

    echo
    start w7_rccsd-t_cc-pvtz_energy
    memory stack 1000 mb heap 100 mb global 1000 mb noverify # you can change this
    permanent_dir . # you can change this
    scratch_dir /tmp # you can change this
    geometry units angstrom
      O -0.46306507 -2.84560143 0.34712980
      H -0.31185448 -3.74723642 0.05234746
      H -0.60259983 -2.31575342 -0.47038548
      O 1.84489062 0.25067396 -1.20027152
      H 2.63515099 0.41824386 -1.72121662
      H 1.46223315 1.13476086 -0.99006607
      O -0.36089385 1.06267739 1.87503037
      H -0.44058089 1.21621663 2.82039947
      H 0.24249561 0.27291766 1.77033787
      O 1.25770268 -0.94282108 1.34833194
      H 0.73624113 -1.73036585 1.09041584
      H 1.67299069 -0.65240393 0.51959506
      O 0.51098073 2.46987019 -0.40854717
      H 0.33154661 2.21905129 0.51484431
      H -0.36738878 2.41346155 -0.80745002
      O -0.68279808 -1.06009111 -1.69106587
      H -1.23658236 -0.35698007 -1.31005956
      H 0.20346941 -0.66506167 -1.72575016
      O -2.06529537 1.02745574 -0.29419144
      H -1.67380330 0.97232115 0.60004746
      H -3.01776458 0.99606215 -0.16747231
    end
    basis "ao basis" spherical noprint
      * library cc-pvtz
    end
    scf
      semidirect memsize 100000000 filesize 0 # you can change this
      singlet
      rhf
      thresh 1e-7
      maxiter 100
      noprint "final vectors analysis" "final vector symmetries"
    end
    ccsd
      freeze atomic
      thresh 1e-6
      maxiter 100
      #nodisk # you can change this
    end
    set ccsd:use_ccsd_omp F # you can change this
    set ccsd:use_trpdrv_omp T # you can change this
    task ccsd(t) energy

The correct result is determined by comparison with the following. The last decimal might vary.

    Total SCF energy: -532.46669473...
    Total CCSD energy: -534.373535...
    Total CCSD(T) energy: -534.43455715...

This job should run in less than 5000 seconds of wall time on 4 nodes.

You should practice on the w5 input first, since it runs faster and allows easier experiments. The following are the reference energies for this configuration.

    Total SCF energy: -380.329008048...
    Total CCSD energy: -381.684999...
    Total CCSD(T) energy: -381.72730035...
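Comparing against the reference energies above is easy to automate. The following sketch assumes that the energy is the last field on the line containing the label, as in the listings above. The helper name energy_matches and the tolerance of 1e-6 are assumptions rather than competition rules, and the sample file only stands in for a real NWChem output.

```shell
# Hypothetical helper: succeed if the last line of FILE containing LABEL
# ends in a number within TOL of REF.
# Usage: energy_matches FILE LABEL REF TOL
# Exit status: 0 = match, 1 = mismatch, 2 = label not found.
energy_matches() {
    awk -v label="$2" -v ref="$3" -v tol="$4" '
        index($0, label) { value = $NF; found = 1 }
        END {
            if (!found) exit 2
            diff = value - ref
            if (diff < 0) diff = -diff
            exit ((diff <= tol + 0) ? 0 : 1)
        }' "$1"
}

# Stand-in for a w5 output file, using the reference values above.
out=$(mktemp)
cat > "$out" <<'EOF'
 Total SCF energy:      -380.329008048
 Total CCSD energy:     -381.684999
 Total CCSD(T) energy:  -381.72730035
EOF

if energy_matches "$out" "Total CCSD(T) energy:" -381.72730035 1e-6; then
    echo "CCSD(T) energy matches the reference"
fi
rm -f "$out"
```

The label is matched as a literal string, so "Total CCSD energy:" and "Total CCSD(T) energy:" are checked independently.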

b. TCE CCSD(T)

The input file that follows (generated with ./make_nwchem_input.py w7 ccsd-t cc-pvtz energy) is the one that must be used. The only lines that you can change are noted as such.

This input should produce the same answers as 1a but uses a completely different implementation, with different algorithms.

    echo
    start w7_ccsd-t_cc-pvtz_energy
    memory stack 1000 mb heap 100 mb global 1000 mb noverify # you can change this
    permanent_dir . # you can change this
    scratch_dir /tmp # you can change this
    geometry units angstrom
      O -0.46306507 -2.84560143 0.34712980
      H -0.31185448 -3.74723642 0.05234746
      H -0.60259983 -2.31575342 -0.47038548
      O 1.84489062 0.25067396 -1.20027152
      H 2.63515099 0.41824386 -1.72121662
      H 1.46223315 1.13476086 -0.99006607
      O -0.36089385 1.06267739 1.87503037
      H -0.44058089 1.21621663 2.82039947
      H 0.24249561 0.27291766 1.77033787
      O 1.25770268 -0.94282108 1.34833194
      H 0.73624113 -1.73036585 1.09041584
      H 1.67299069 -0.65240393 0.51959506
      O 0.51098073 2.46987019 -0.40854717
      H 0.33154661 2.21905129 0.51484431
      H -0.36738878 2.41346155 -0.80745002
      O -0.68279808 -1.06009111 -1.69106587
      H -1.23658236 -0.35698007 -1.31005956
      H 0.20346941 -0.66506167 -1.72575016
      O -2.06529537 1.02745574 -0.29419144
      H -1.67380330 0.97232115 0.60004746
      H -3.01776458 0.99606215 -0.16747231
    end
    basis "ao basis" spherical noprint
      * library cc-pvtz
    end
    scf
      semidirect memsize 100000000 filesize 0 # you can change this
      singlet
      rhf
      thresh 1e-7
      maxiter 100
      noprint "final vectors analysis" "final vector symmetries"
    end
    tce
      freeze atomic
      scf
      ccsd(t)
      thresh 1e-6
      maxiter 100
      2eorb
      2emet 13 # you can change this (14 is another good option)
      attilesize 40 # you can change this, but it probably won't matter
      tilesize 16 # you can change this
    end
    set tce:nts T # you can change this - it impacts only the CCSD iterations
    set tce:xmem 1000 # you can change this - it impacts (T) algorithm and memory
    task tce energy

c. Density functional theory

The input file that follows (generated with ./make_nwchem_input.py w21 b3lyp cc-pvtz energy) is the one that must be used. The only lines that you can change are noted as such.

    echo
    start w21_b3lyp_cc-pvtz_energy
    memory stack 1000 mb heap 100 mb global 1000 mb noverify # you can change this
    permanent_dir . # you can change this
    scratch_dir /tmp # you can change this
    geometry units angstrom
      O 2.88102880 1.35617710 -1.35936865
      H 2.58653411 2.28696609 -1.45609052
      H 3.32093709 1.29343465 -0.49116710
      O 0.32179453 0.62629846 -0.94851226
      H 1.26447032 0.88843717 -1.07026883
      H -0.21718807 1.36563667 -1.31919284
      O -0.16007111 -1.63872066 -2.31840571
      H 0.01823460 -0.80812840 -1.82332661
      H 0.10093174 -2.33288028 -1.67894566
      O -2.84753775 -0.70659788 1.22536498
      H -2.82066138 -1.51379494 1.77020749
      H -2.78672686 -1.02520290 0.29442139
      O -1.28347305 2.64257815 -1.71714330
      H -1.51430450 2.88443923 -0.79572381
      H -2.09726640 2.24298480 -2.07172804
      O -3.72092200 1.16535937 -2.05155378
      H -3.96467209 1.40975690 -1.12274906
      H -4.48373842 1.39703848 -2.59023807
      O -2.75707813 -1.44066167 -1.41017665
      H -3.20125609 -0.67001218 -1.80694890
      H -1.88535531 -1.49090783 -1.86154221
      O -2.18430745 -4.00503366 -0.11745556
      H -2.58170529 -3.32141555 -0.67897787
      H -1.24901286 -4.03122679 -0.38234526
      O 3.76378263 0.84987648 1.25359107
      H 4.64925014 0.99382151 1.60153466
      H 3.59269678 -0.12230330 1.37734803
      O 3.25939067 -1.73586556 1.54809767
      H 2.38789220 -1.97346725 1.90398296
      H 3.34047461 -2.24000005 0.71725832
      O 0.47067808 -2.08139855 2.05406984
      H -0.31565776 -2.51643817 2.42960695
      H 0.20916882 -1.14318846 1.97673663
      O 0.84341794 4.45219154 1.19543335
      H 1.22169208 3.69174389 1.70700542
      H 1.12534416 5.24681772 1.65820509
      O 3.29073454 -3.06649080 -0.92760728
      H 4.01092916 -3.62238167 -1.23916202
      H 3.16110064 -2.37099393 -1.62514329
      O -2.13311585 -3.15170259 2.41174304
      H -2.19514114 -3.57941119 1.51889439
      H -2.56149431 -3.75503610 3.02609742
      O 1.49292018 3.74546296 -1.50486304
      H 1.31323368 4.10353119 -0.61794466
      H 0.61187301 3.56898981 -1.86464476
      O -0.48284790 0.54531195 1.58871855
      H -0.15462414 0.54530283 0.65343103
      H -1.36928803 0.11358922 1.52480652
      O 2.72236852 -1.18342053 -2.72120243
      H 1.76763800 -1.29146509 -2.86748821
      H 2.83269882 -0.26932242 -2.40792605
      O 1.64182263 2.24659436 2.46745517
      H 2.41153336 1.77257628 2.10024166
      H 0.91471507 1.60671414 2.41570272
      O -4.10080206 1.73028823 0.54526604
      H -3.83492913 0.90816710 0.99225907
      H -3.44369737 2.38297040 0.83399222
      O -1.60618492 3.09549014 1.00019438
      H -0.94029673 3.78712081 1.16680637
      H -1.18360076 2.28420300 1.34174432
      O 0.57200422 -3.46363889 -0.35742274
      H 0.57764102 -2.95313709 0.47874423
      H 1.51287263 -3.60041770 -0.56712755
    end
    basis "ao basis" spherical noprint
      * library cc-pvtz
    end
    # you can remove this entire block if you want
    scf
      direct # you can change this
      singlet
      rhf
      thresh 1e-7
      maxiter 100
      vectors input atomic output w21_scf_cc-pvtz.movecs
      noprint "final vectors analysis" "final vector symmetries"
    end
    task scf energy ignore
    # /end thing you can remove
    dft
      direct # you can change this
      xc b3lyp
      grid fine
      iterations 100
      vectors input w21_scf_cc-pvtz.movecs # remove this if you remove the block above
      noprint "final vectors analysis" "final vector symmetries"
    end
    task dft energy

The correct result is determined by comparison with the following. The last decimal might vary.

    Total SCF energy = -1597.43928 # OPTIONAL (only if you run SCF first)
    Total DFT energy = -1606.05998
    Nuclear repulsion energy = 2617.217984618690

This job should run in less than 600 seconds of wall time on 40 cores. There is no GPU support for DFT so do not try to measure anything related to GPUs here.

2. Obtain an IPM profile for the application and submit the results as a PDF file (don’t submit the raw ipm_parse -html data.) What are the top three MPI calls used?

3. Visualize the results, create a figure or short video, and submit the results. If the team has a Twitter account, publish the figure or video with the hashtags #ISC22, #ISC22_SCC, #NWChem (mark/tag the figure or video with your team name/university).

4. Run a 4-GPU job on the Bridges-2 cluster using V100 nodes and submit the results. Either the semidirect CCSD(T) input or the TCE CCSD(T) input may be used; you can submit both, but only one is required.